Phaser 3 Development Log – w/e 14th Feb

Development of Phaser 3 is already under way. I asked Pete Baron, who is doing the core work on the new renderer, to sum up each week of development. Here is his latest report:

Phaser 3 Renderer Progress

All source code and examples can be found in the phaser3 Git repo (and here is the commit log).

canvasToGl demo

Rich mentioned that being able to transfer Canvas to WebGL textures is very important for a number of use cases, so I took a stab at wrapping it in a fairly accessible API call and did a few performance tests. It looks like the bottleneck will be the texture transfer; the rest of the code is relatively simple and isn’t doing much that is likely to stall the GPU. The new demo has one Canvas texture (underneath the WebGL window) with a constantly changing background colour and a number that increments once per second. In the WebGL window you can see the same texture transferred onto 10 separate WebGL textures, applied to pbSprites, and bouncing, scaling and rotating. This demo seems to slow down oddly when the window is not focused… I need to look into that (but it’ll be tough, because it doesn’t do it when focused).
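To make the canvasToGl idea concrete, here is a minimal TypeScript sketch of what such a wrapper could look like. It is not the pbWebGlTextures code: the function name `canvasToTexture` and the `GLSubset` interface are hypothetical, and `GLSubset` simply lists the few real `WebGLRenderingContext` members the sketch uses, so it can be exercised against a stub outside a browser. The expensive step is the `texImage2D` call, which copies the whole canvas to the GPU every time, consistent with the texture transfer being the bottleneck.

```typescript
// Minimal subset of WebGLRenderingContext used below (hypothetical interface),
// so the sketch can run against a stub; a real context provides these members.
interface GLSubset {
  readonly TEXTURE_2D: number;
  readonly RGBA: number;
  readonly UNSIGNED_BYTE: number;
  readonly TEXTURE_MIN_FILTER: number;
  readonly TEXTURE_MAG_FILTER: number;
  readonly TEXTURE_WRAP_S: number;
  readonly TEXTURE_WRAP_T: number;
  readonly LINEAR: number;
  readonly CLAMP_TO_EDGE: number;
  bindTexture(target: number, texture: unknown): void;
  texParameteri(target: number, pname: number, param: number): void;
  texImage2D(target: number, level: number, internalformat: number,
             format: number, type: number, source: unknown): void;
}

// Hypothetical helper: copy a canvas's current pixels into an existing texture.
// Call it again whenever the source canvas changes.
function canvasToTexture(gl: GLSubset, texture: unknown, canvas: unknown): void {
  gl.bindTexture(gl.TEXTURE_2D, texture);
  // WebGL 1 only samples non-power-of-two textures that have no mipmaps and
  // CLAMP_TO_EDGE wrapping, so set both to accept any canvas size.
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.LINEAR);
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.LINEAR);
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE);
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE);
  // The costly part: upload the entire canvas (level 0, RGBA bytes).
  gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGBA, gl.RGBA, gl.UNSIGNED_BYTE, canvas);
}
```

In a browser you would pass the real context, for example `canvasToTexture(gl, textures[i], sourceCanvas)` for each of the ten textures whenever the source canvas changes. The filter and wrap settings matter because WebGL 1 treats a non-power-of-two texture with mipmapping or `REPEAT` wrapping as incomplete, and canvases are rarely power-of-two sized.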

glToCanvas demo

In the same spirit, I investigated grabbing a WebGL texture and putting it into a canvas. Again this is wrapped in some simple API calls, and it seems to work well. This demo adds a ‘source’ canvas (as in the previous demo) and 10 ‘destination’ canvases. The source data is transferred to the WebGL texture once per second (you can see that the smoothly changing background colour of the source is only updated in the GL texture when the number changes). Then the WebGL texture is transferred back down to the 10 destination canvases every frame (and you can see those 10 canvases changing exactly in step with the WebGL texture). This has a couple of potential bottlenecks: the texture transfer itself, and the way things have to be set up. I’d only use this in a game if it was essential, and if I could find a good way to limit the frequency and size of the transfers.
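The post doesn’t say how the texture is read back. One common WebGL approach, assumed here, is to attach the texture to a framebuffer, read it with `gl.readPixels`, and write the result into each destination canvas with `putImageData`. A detail that catches people out is that `readPixels` returns the bottom row first, while canvas `ImageData` starts at the top, so the rows have to be flipped. The sketch below does that flip in place (the name `flipRowsRGBA` is hypothetical); the browser-only calls are shown as comments.

```typescript
// Hypothetical helper: flip RGBA rows in place. gl.readPixels fills the buffer
// starting from the bottom row of the framebuffer, while canvas ImageData
// expects the top row first.
function flipRowsRGBA(pixels: Uint8Array, width: number, height: number): Uint8Array {
  const rowBytes = width * 4; // 4 bytes (R, G, B, A) per pixel
  const temp = new Uint8Array(rowBytes);
  for (let top = 0, bottom = height - 1; top < bottom; top++, bottom--) {
    const a = top * rowBytes;
    const b = bottom * rowBytes;
    temp.set(pixels.subarray(a, a + rowBytes)); // save the top row
    pixels.copyWithin(a, b, b + rowBytes);      // bottom row -> top
    pixels.set(temp, b);                        // saved top row -> bottom
  }
  return pixels;
}

// Browser-only usage, assuming `fbo` is a framebuffer with the texture attached
// as COLOR_ATTACHMENT0 and `ctx` is a destination canvas's 2D context:
//   gl.bindFramebuffer(gl.FRAMEBUFFER, fbo);
//   const pixels = new Uint8Array(w * h * 4);
//   gl.readPixels(0, 0, w, h, gl.RGBA, gl.UNSIGNED_BYTE, pixels);
//   flipRowsRGBA(pixels, w, h);
//   ctx.putImageData(new ImageData(new Uint8ClampedArray(pixels.buffer), w, h), 0, 0);
```

Because `readPixels` is synchronous, the CPU has to wait for the GPU to finish drawing before the copy can happen, which is one reason to keep readbacks as small and infrequent as possible.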

More API Investigations

I finally got around to looking at a bunch of other people’s work in the area of WebGL and graphics APIs. I was already familiar with several API projects, either from using them or from general research before I started this project, but I was very pleased to find quite a few new approaches. There is a new docs folder in the repo, and I added API_comparison_results.txt there with a summary of what I found. I still have a couple more APIs to look into and am hopeful of finding some more cool ideas!

The pbWebGl.js file had become quite unmanageable due to its size and complexity, so I took some time to clean it up. The shader programs and the texture handling are now in new files, pbWebGlShaders and pbWebGlTextures, and while I was copying and pasting code I made an effort to simplify the approach to both.

While researching another WebGL question I came across a reference to using WebGL points as textured objects, and that led me to add an alternative render mode for WebGL. By drawing with GL_POINTS we send a single vertex per sprite instead of the triangles (four or six corner vertices) that a textured quad needs, and with some streamlining in pbSimpleLayer it was possible to present the drawing function with a list of points in exactly the format it requires. The end result is that, for certain very simple sprite drawing operations, it’s possible to eliminate the second processing loop (preparing the data to be drawn by WebGL) and gain some remarkable speed advantages.
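The points approach can be sketched as follows, assuming each sprite needs only a position plus a shared texture and size. `packPoints`, `PointSprite` and the shader variable names are hypothetical, not pbSimpleLayer’s actual code. Each sprite becomes one vertex in an interleaved `Float32Array`; the vertex shader sets `gl_PointSize`, and the fragment shader samples the texture with `gl_PointCoord`, which runs from 0 to 1 across the point.

```typescript
// Hypothetical sprite record; pbSimpleLayer's real structures may differ.
interface PointSprite {
  x: number; // centre x in canvas pixels
  y: number; // centre y in canvas pixels
}

// Pack positions as x0, y0, x1, y1, ...: one vertex per sprite, ready for
// gl.bufferData followed by gl.drawArrays(gl.POINTS, 0, sprites.length).
// Reuses `out` when it is big enough, to avoid allocating every frame;
// only the first sprites.length * 2 floats are meaningful.
function packPoints(sprites: readonly PointSprite[], out?: Float32Array): Float32Array {
  const needed = sprites.length * 2;
  const buf = out !== undefined && out.length >= needed ? out : new Float32Array(needed);
  sprites.forEach((s, i) => {
    buf[i * 2] = s.x;
    buf[i * 2 + 1] = s.y;
  });
  return buf;
}

const POINT_VERTEX_SHADER = `
attribute vec2 aPosition;   // x, y from packPoints
uniform vec2 uResolution;   // canvas size in pixels
uniform float uPointSize;   // sprite size in pixels
void main() {
  vec2 clip = (aPosition / uResolution) * 2.0 - 1.0;
  gl_Position = vec4(clip.x, -clip.y, 0.0, 1.0); // canvas y grows downward
  gl_PointSize = uPointSize;
}`;

const POINT_FRAGMENT_SHADER = `
precision mediump float;
uniform sampler2D uTexture;
void main() {
  gl_FragColor = texture2D(uTexture, gl_PointCoord);
}`;
```

The trade-off explains why this suits only very simple sprites: a point is always an axis-aligned square centred on its vertex, so rotation or non-square sprites need extra shader work, and the largest allowed point size is implementation-dependent (query `gl.ALIASED_POINT_SIZE_RANGE`).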

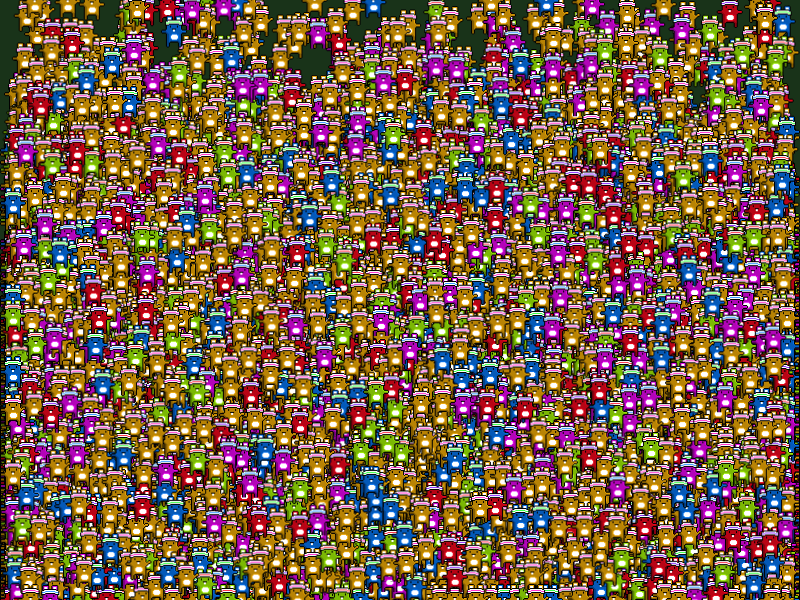

The new bunnyPoint demo illustrates this approach and reaches a quarter of a million bunnies on my desktop (I had to add a screenshot of that in docs because it’s a good milestone!)

Then I looked into adding some more functionality to the new demo and ended up with the bunnyPointAnim demo, which uses the same GL_POINTS approach but draws from a specified area of a sprite sheet texture. This makes the approach a lot more useful (and saves people from sending tons of small textures to the GPU). That demo seems to top out at 220k bunnies, so there is a small loss of speed, probably because we need to send both point and texture source coordinates, which is twice as much data.
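Drawing from a sprite sheet adds a texture source coordinate to every point. The sketch below assumes the sheet is a uniform grid of equal-sized frames and that each point carries its frame’s top-left corner in normalised (0 to 1) texture coordinates; `gridFrames`, `packPointsWithFrames` and the shader names are hypothetical. Each point now costs four floats (x, y, u, v) instead of two, which is the doubling of data described above.

```typescript
// Hypothetical types: a frame is the top-left corner of a cell in the sheet,
// in normalised texture coordinates (0..1).
interface Frame { u: number; v: number; }
interface AnimatedPointSprite { x: number; y: number; frame: number; }

// Assumes the sheet is a uniform grid; frames are numbered row by row.
function gridFrames(columns: number, rows: number): Frame[] {
  const frames: Frame[] = [];
  for (let r = 0; r < rows; r++) {
    for (let c = 0; c < columns; c++) {
      frames.push({ u: c / columns, v: r / rows });
    }
  }
  return frames;
}

// Interleave x, y, u, v per sprite: 4 floats per point instead of 2.
function packPointsWithFrames(
  sprites: readonly AnimatedPointSprite[],
  frames: readonly Frame[],
): Float32Array {
  const FLOATS_PER_POINT = 4;
  const buf = new Float32Array(sprites.length * FLOATS_PER_POINT);
  sprites.forEach((s, i) => {
    const f = frames[s.frame];
    if (f === undefined) throw new RangeError(`sprite ${i} uses missing frame ${s.frame}`);
    const o = i * FLOATS_PER_POINT;
    buf[o] = s.x;
    buf[o + 1] = s.y;
    buf[o + 2] = f.u;
    buf[o + 3] = f.v;
  });
  return buf;
}

const SHEET_VERTEX_SHADER = `
attribute vec2 aPosition;     // x, y from the packed buffer
attribute vec2 aFrameOrigin;  // u, v from the packed buffer
uniform vec2 uResolution;
uniform float uPointSize;
varying vec2 vFrameOrigin;
void main() {
  vec2 clip = (aPosition / uResolution) * 2.0 - 1.0;
  gl_Position = vec4(clip.x, -clip.y, 0.0, 1.0);
  gl_PointSize = uPointSize;
  vFrameOrigin = aFrameOrigin;
}`;

const SHEET_FRAGMENT_SHADER = `
precision mediump float;
uniform sampler2D uTexture;
uniform vec2 uFrameSize;      // (1 / columns, 1 / rows) for a uniform grid
varying vec2 vFrameOrigin;
void main() {
  // Map gl_PointCoord (0..1 across the point) into this sprite's frame.
  gl_FragColor = texture2D(uTexture, vFrameOrigin + gl_PointCoord * uFrameSize);
}`;
```

With this interleaved layout each attribute would be bound with a 16-byte stride: `gl.vertexAttribPointer(posLoc, 2, gl.FLOAT, false, 16, 0)` for the position and `gl.vertexAttribPointer(frameLoc, 2, gl.FLOAT, false, 16, 8)` for the frame origin.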

Several of the changes this week temporarily broke one or more demos, but I think I’ve since fixed all the issues, and all demos should be running correctly again. Drop me a line if you find anything that doesn’t appear to work correctly!

Posted on February 18th 2015 at 8:48 pm by Rich.
